
Time: 2022-06-30 13:17:41

Andrea Benucci and colleagues at the RIKEN Center for Brain Science, part of Japan's comprehensive research institute RIKEN, have developed a method for building artificial neural networks that learn to recognize objects faster and more accurately, media reported. The study focused on the eye movements we make without noticing them and found that these movements play an important role in recognizing objects stably. The findings could be applied to machine vision, for example to make it easier for self-driving cars to learn to recognize important features on the road.

Image source: RIKEN

Our heads and eyes move constantly throughout the day, yet we do not perceive the world as blurred or unstable, even though the physical information on the retina keeps changing. What makes this perceptual stability possible are neural copies of motor commands (efference copies). These copies are sent throughout the brain with each movement and are thought to let the brain account for its own movements and keep perception stable.

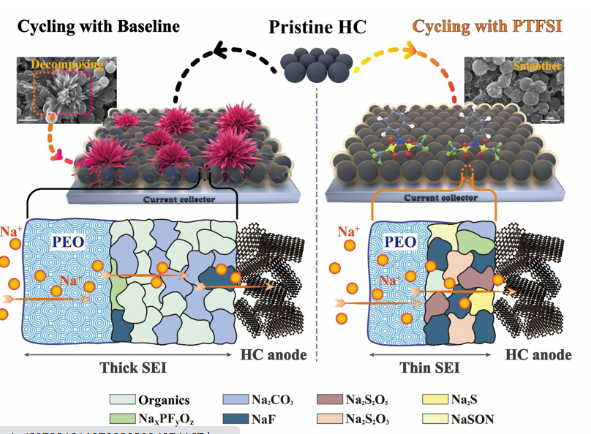

Beyond stable perception, there is evidence that eye movements and their copies may also contribute to the stable recognition of objects in the world, but exactly how this happens is unknown. Benucci developed a convolutional neural network (CNN) that optimizes the classification of objects in a visual scene while the eyes are moving, an approach that could help address this question.

First, the network was trained to classify 60,000 black-and-white images into 10 categories. Although it performed well on these images, its performance dropped sharply, to chance level, when it was tested on shifted images that simulate how visual input naturally changes as the eyes move. However, after the network was trained on shifted images, classification improved significantly, provided that the direction and magnitude of the eye movements that caused each shift were also given to the network.
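To make the role of the shift information concrete, here is a deliberately simplified toy sketch in plain Python. It is not the authors' CNN or code: all names (`shift_image`, `make_training_example`, `classify`) are hypothetical, an "eye movement" is assumed to be a circular shift of the image, and the "efference copy" is assumed to be just the `(dx, dy)` shift vector. In the study this information was an extra input to a neural network; here, as a stand-in, a nearest-template classifier uses it to undo the shift before matching.

```python
import random
from typing import Dict, List, Optional, Tuple

Image = List[List[float]]


def shift_image(img: Image, dx: int, dy: int) -> Image:
    """Circularly shift img by dx columns and dy rows (toy stand-in for an eye movement)."""
    h, w = len(img), len(img[0])
    return [[img[(r - dy) % h][(c - dx) % w] for c in range(w)] for r in range(h)]


def make_training_example(
    img: Image, label: str, max_shift: int, rng: random.Random
) -> Tuple[Image, Tuple[int, int], str]:
    """Return (shifted image, efference copy, label).

    Assumption: the efference copy is simply the (dx, dy) vector, i.e. the
    direction and magnitude of the simulated eye movement.
    """
    dx = rng.randint(-max_shift, max_shift)
    dy = rng.randint(-max_shift, max_shift)
    return shift_image(img, dx, dy), (dx, dy), label


def classify(
    img: Image, templates: Dict[str, Image], efference: Optional[Tuple[int, int]] = None
) -> str:
    """Nearest-template classifier; if an efference copy is given, undo the shift first."""
    if efference is not None:
        dx, dy = efference
        img = shift_image(img, -dx, -dy)

    def dist(a: Image, b: Image) -> float:
        # Sum of squared pixel differences.
        return sum((x - y) ** 2 for ra, rb in zip(a, b) for x, y in zip(ra, rb))

    return min(templates, key=lambda lbl: dist(img, templates[lbl]))


# Two 4x4 toy "categories": a vertical bar and a horizontal bar.
vertical = [[1.0 if c == 0 else 0.0 for c in range(4)] for r in range(4)]
horizontal = [[1.0 if r == 0 else 0.0 for c in range(4)] for r in range(4)]
templates = {"vertical": vertical, "horizontal": horizontal}

seen = shift_image(vertical, 2, 0)  # the "eye" moved two pixels sideways
print(classify(seen, templates))          # without the shift vector: horizontal
print(classify(seen, templates, (2, 0)))  # with the shift vector: vertical
```

In this toy case, the shifted vertical bar is closer to the horizontal template than to the unshifted vertical one, so the classifier fails without the shift vector and succeeds once it is supplied, loosely mirroring the drop and recovery described above.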

In particular, when eye movements and their copies were added to the network model, the system was better able to cope with visual noise in images. "This advance will help avoid dangerous errors in machine vision," Benucci said. "With more efficient and robust machine vision, pixel alterations (also known as 'adversarial attacks') are less likely to cause, for example, self-driving cars to label stop signs as light poles, or military drones to misclassify hospital buildings as enemy targets."

Benucci explained: "Imitating eye movements and their efference copies means 'forcing' a machine vision sensor to make controlled types of movements, while informing the vision network that processes the associated images about these self-generated movements. This makes machine vision more robust and more similar to human visual experience."
